AdaptSLAM, our recent work led by Ying Chen, explores new approaches for adapting edge-computing-supported Visual and Visual-Inertial Simultaneous Localization and Mapping (V- and VI-SLAM) to computation and communication resource constraints.

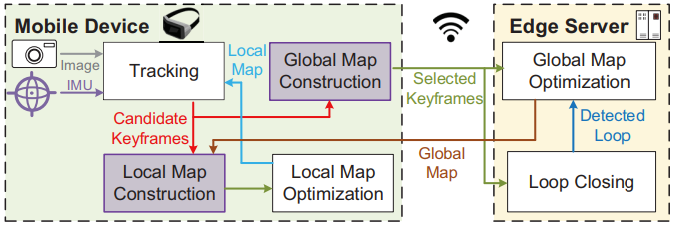

AdaptSLAM’s system architecture. Our design centers on the two highlighted modules. We optimize our algorithms to run in real time on mobile devices.

Recent work has demonstrated notable advantages of offloading computationally expensive parts of SLAM pipelines to an edge server. The question remains, however, of how best to do this under varying conditions. We propose a method that quantifies the value of each keyframe in terms of the information it adds to the constructed map. This is the first theoretically grounded method for building size-limited local and global maps that minimize map uncertainty, and it lays the foundation for optimal adaptive offloading of SLAM tasks under communication and computation constraints.
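To give a feel for what "the value of a keyframe" can mean, here is a minimal, generic sketch. It is not AdaptSLAM's actual metric or algorithm (those are described in the paper). It assumes that each candidate keyframe's contribution is summarized by a small information matrix, that map uncertainty is measured by the log-determinant of the accumulated information (a common D-optimality criterion), and that a fixed `budget` stands in for the size limit on a local or global map. The helpers `log_det` and `greedy_select` and the toy matrices are hypothetical and chosen only for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def mat_add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally sized square matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def log_det(m: Matrix) -> float:
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(m)
    chol = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(chol[i][k] * chol[j][k] for k in range(j))
            if i == j:
                if s <= 0:
                    raise ValueError("matrix is not positive definite")
                chol[i][j] = math.sqrt(s)
            else:
                chol[i][j] = s / chol[j][j]
    # det(M) = prod(diag(L))^2, so log det(M) = 2 * sum(log(diag(L)))
    return 2.0 * sum(math.log(chol[i][i]) for i in range(n))


def greedy_select(prior: Matrix, keyframe_info: List[Matrix], budget: int) -> List[int]:
    """Hypothetical greedy keyframe selection: at each step, add the keyframe
    whose information yields the largest increase in log det (i.e. the largest
    reduction in map uncertainty), until the budget is exhausted."""
    selected: List[int] = []
    current = prior
    for _ in range(min(budget, len(keyframe_info))):
        base = log_det(current)
        best_idx, best_gain = -1, -math.inf
        for idx, info in enumerate(keyframe_info):
            if idx in selected:
                continue
            gain = log_det(mat_add(current, info)) - base
            if gain > best_gain:
                best_idx, best_gain = idx, gain
        selected.append(best_idx)
        current = mat_add(current, keyframe_info[best_idx])
    return selected


# Toy 2-D example: keyframes 0 and 1 both constrain x, keyframe 2 constrains y.
prior = [[1.0, 0.0], [0.0, 1.0]]
keyframes = [
    [[4.0, 0.0], [0.0, 0.0]],
    [[3.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
print(greedy_select(prior, keyframes, budget=2))  # [0, 2]
```

In the toy example, keyframe 1 adds more information than keyframe 2 in isolation (a gain of ln 4 versus ln 3), yet once keyframe 0 is in the map it is largely redundant, so the greedy step prefers keyframe 2. This diminishing-returns behavior is why scoring keyframes by the information they add, rather than independently, matters when map size is limited.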

Ying will be presenting AdaptSLAM at IEEE INFOCOM in May 2023 [Paper PDF]. Ying will also be presenting a demo based on AdaptSLAM, titled SpacecraftWalk: Demonstrating Resource-Efficient SLAM in Virtual Spacecraft Environments [Demo PDF]. AdaptSLAM code is publicly available.

This work is supported by NSF grants CSR-1903136, CNS-1908051, and CNS-2112562, NSF CAREER Award IIS-2046072, by an IBM Faculty Award, and by the Australian Research Council under Grant DP200101627. SpacecraftWalk is additionally supported by NASA SBIR under Contract No. 80NSSC22PB105.