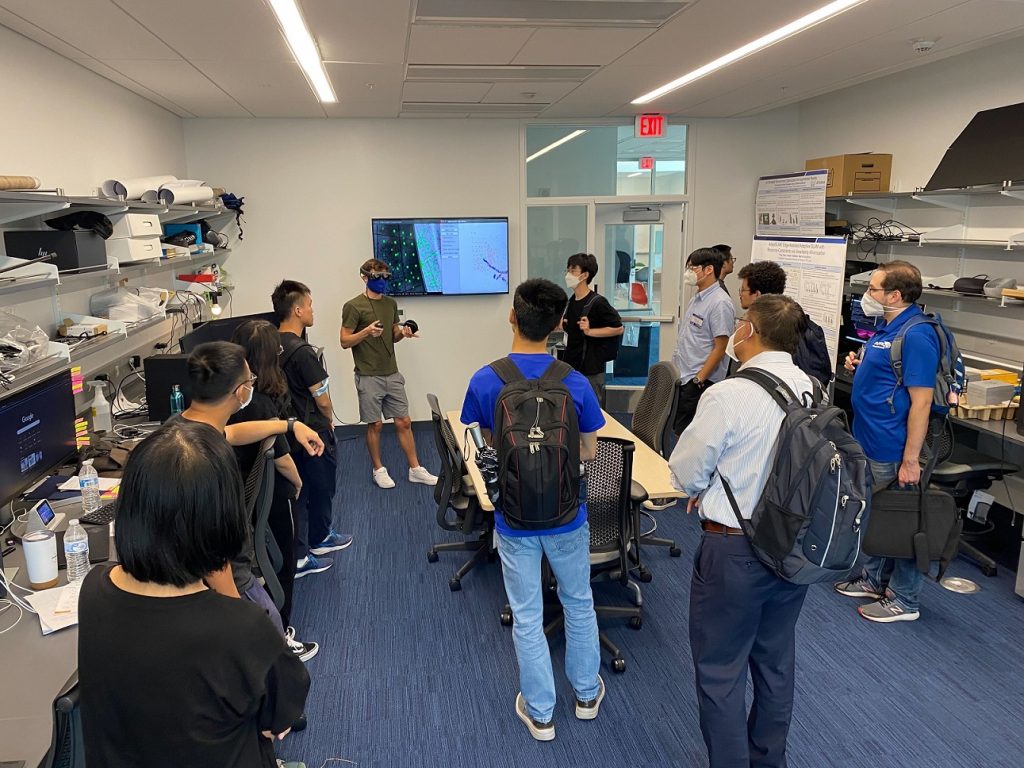

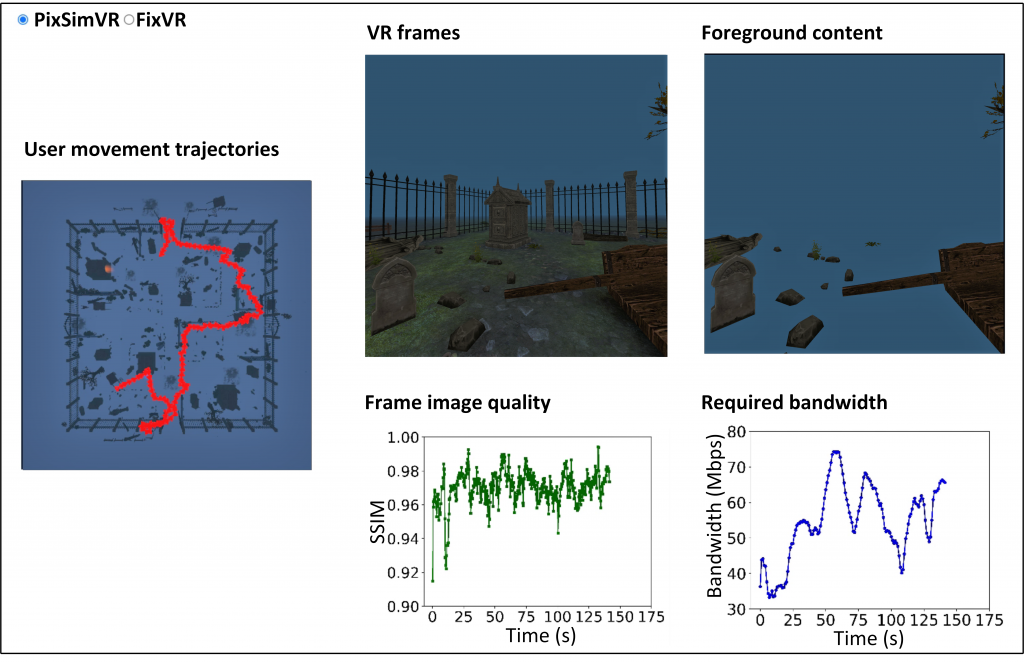

The Duke University I3T Lab is looking for a postdoc in the area of edge computing-supported, context-aware augmented reality. The postdoc will work closely with PI Gorlatova and two very capable and driven ECE and CS PhD students, and will have the opportunity to participate in the activities of the NSF AI ATHENA Institute for Edge Computing Leveraging Next Generation Networks.

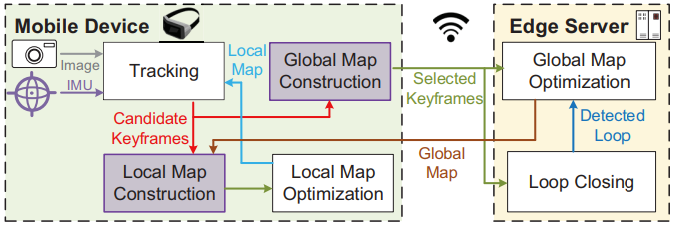

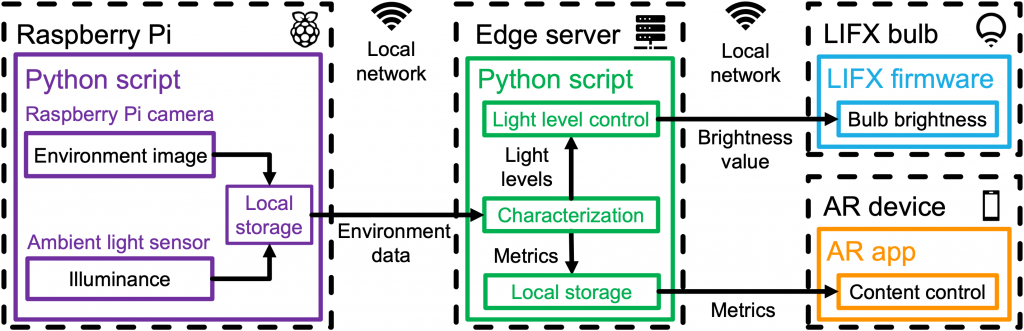

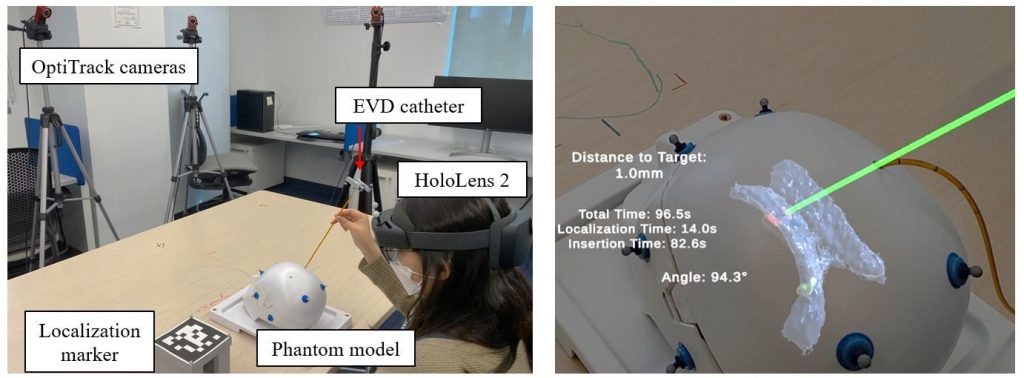

The position is best suited to candidates with experience in ML for mobile systems or pervasive sensing, such as real-time human activity recognition, real-time edge video analytics, or ML-based QoS or QoE prediction. Exposure to augmented and virtual reality (e.g., Unity or Unreal, mobile AR SDKs, AR or VR QoS and QoE, V/VI-SLAM) is advantageous but not required.

If you are interested in this position, please e-mail maria.gorlatova@duke.edu with your up-to-date CV, your transcripts, and two papers that you believe represent your best work.

This position can start in September 2023, January 2024, or May 2024.

The lab’s previous postdoctoral associate, Dr. Guohao Lan, successfully secured an independent Assistant Professor position at a top university.

Duke University is an exceptionally selective private Ivy Plus school, with a beautiful Gothic campus located in the lively, progressive Raleigh-Durham area of North Carolina. It is a wonderful place to work and a wonderful place to live.