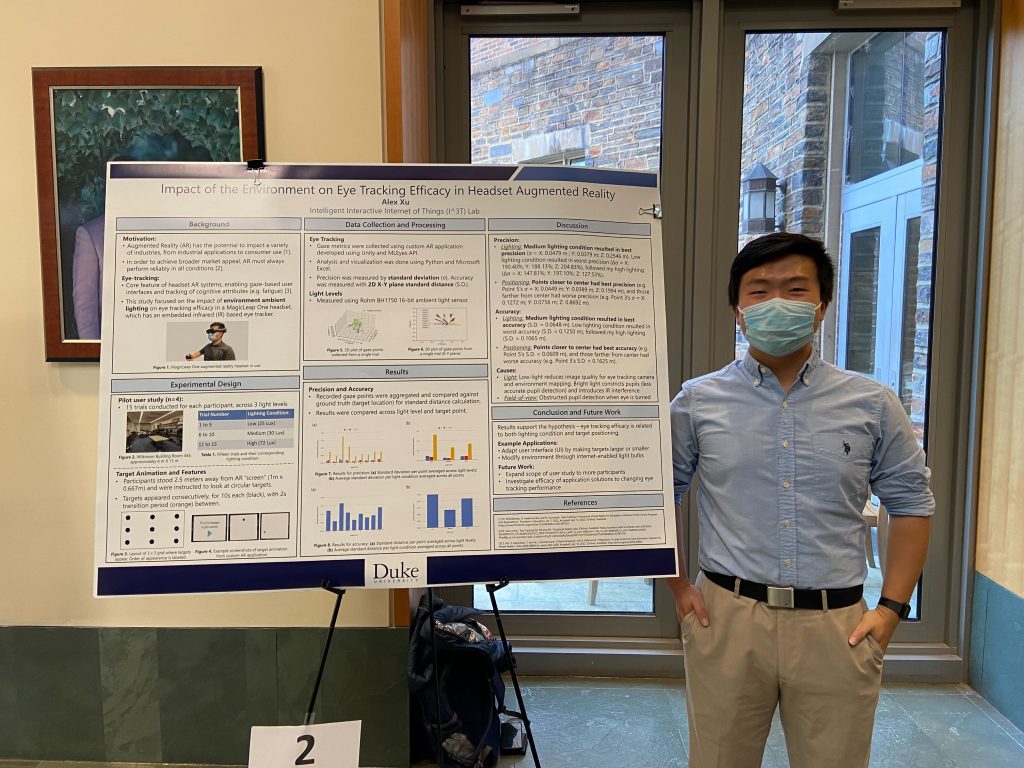

Seven ECE and CS independent undergraduate research projects were completed in the I^3T lab in Fall 2020. The projects are summarized below. This work is supported in part by NSF grants CSR-1903136 and CNS-1908051, an IBM Faculty Award, and the Lord Foundation of North Carolina.

Evaluating Object Detection Models through Photo-Realistic Synthetic Scenes in Game Engines

Achintya Kumar and Brianna Butler

We build an automatic pipeline that evaluates object recognition algorithms on photo-realistic 3D scenes generated in game engines, namely Unity (with the High Definition Render Pipeline) and Unreal. Specifically, we measure detection accuracy and intersection over union (IoU) under varying lighting, reflection and transparency levels, camera or object rotations, blurring, occlusions, and object textures. The pipeline collects a large-scale dataset covering these conditions without manual capture by controlling multiple parameters in the game engines. For example, we control illumination by changing the lux values and types of the light sources; we control reflection and transparency levels through custom render pipelines; and we control texture by building a texture library and randomly choosing each object's texture from it. Another important component of the pipeline is per-pixel ground truth generation: the RGB value of each pixel in the ground truth image encodes the ID of the object that pixel belongs to. With this ground truth, detection accuracy and IoU are computed without manual labeling.
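To make the ground truth step concrete, here is a minimal, dependency-free Python sketch of how an IoU score could be derived from a per-pixel ID image. It is not the project's actual code. The color encoding (`id = R + 256*G + 65536*B`), the function names, and the toy 8x8 image are all assumptions made for illustration; the real pipeline may encode IDs differently and would typically use an array library on images exported from the engine.

```python
def decode_id(pixel):
    """Turn an (R, G, B) tuple into an integer object ID.
    Assumed encoding for this sketch: id = R + 256*G + 65536*B."""
    r, g, b = pixel
    return r + 256 * g + 65536 * b


def box_from_ground_truth(gt_image, object_id):
    """Return the tight box (x_min, y_min, x_max, y_max) around all pixels
    labeled with object_id, with exclusive max coordinates.
    Returns None if the object is not visible in the image."""
    xs, ys = [], []
    for y, row in enumerate(gt_image):
        for x, pixel in enumerate(row):
            if decode_id(pixel) == object_id:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Toy ground truth: 8x8 background (ID 0) with a 4x4 object of ID 1.
gt_image = [[(0, 0, 0)] * 8 for _ in range(8)]
for y in range(2, 6):
    for x in range(2, 6):
        gt_image[y][x] = (1, 0, 0)

gt_box = box_from_ground_truth(gt_image, object_id=1)
predicted_box = (4, 2, 8, 6)  # hypothetical detector output
score = iou(gt_box, predicted_box)
print(gt_box, round(score, 3))
```

Because the box is read directly from the rendered ID image, the same loop can score every generated frame with no human annotation, which is the property the pipeline relies on.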
