In our recent work, which appeared in ACM SenSys 2020, we take a close look at detecting cognitive context, the state of a person’s mind, through eye tracking.

Eye tracking is a fascinating human sensing modality. Eye movements are correlated with deep desires and personality traits; a careful observer of a person’s eyes can discern focus, expertise, and emotions. Moreover, many elements of eye movements are involuntary, and are more readily observed by an eye tracking algorithm than by the user herself.

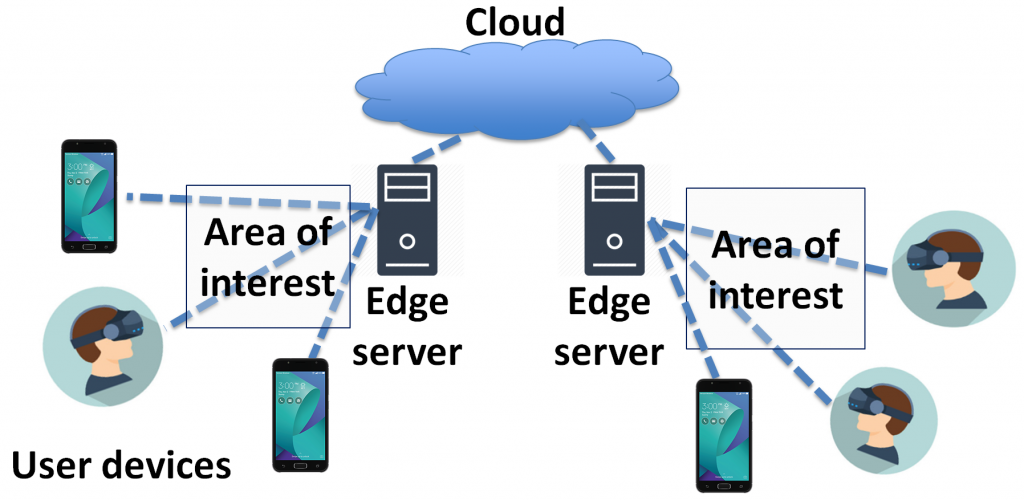

The high-level motivation for this work is the wide availability of eye trackers in modern augmented and virtual reality (AR and VR) devices. For instance, both Magic Leap and HoloLens AR headsets integrate eye trackers, which have many potential uses, including gaze-based user interfaces and gaze-adapted rendering. Traditional wearable human activity monitoring recognizes what the user does while she is moving around: running, jumping, walking up and down stairs. However, humans spend significant portions of their days engaged in cognitive tasks that are not associated with discernible large-scale body movements: reading, watching videos, browsing the Internet. The differences between these activities, indistinguishable to motion-based sensing, are readily picked up by eye trackers. Our work focuses on improving the recognition accuracy of eye movement-based cognitive context sensing, and on enabling it to operate with few training instances, the so-called few-shot learning, which is particularly important given the sensitive and personal nature of eye tracking data.

Our paper and additional information:

- G. Lan, B. Heit, T. Scargill, M. Gorlatova, GazeGraph: Graph-based Few-Shot Cognitive Context Sensing from Human Visual Behavior, in Proc. ACM SenSys’20, Nov. 2020 (20.6% acceptance rate). [Paper PDF] [Dataset and codebase] [Video of the talk]