Our paper on image recognition for mobile augmented reality in the presence of image distortions appeared in IEEE/ACM IPSN’20 and received the conference’s Best Research Artifact Award. [ Paper PDF ] [ Presentation slides ] [ Video of the presentation ]

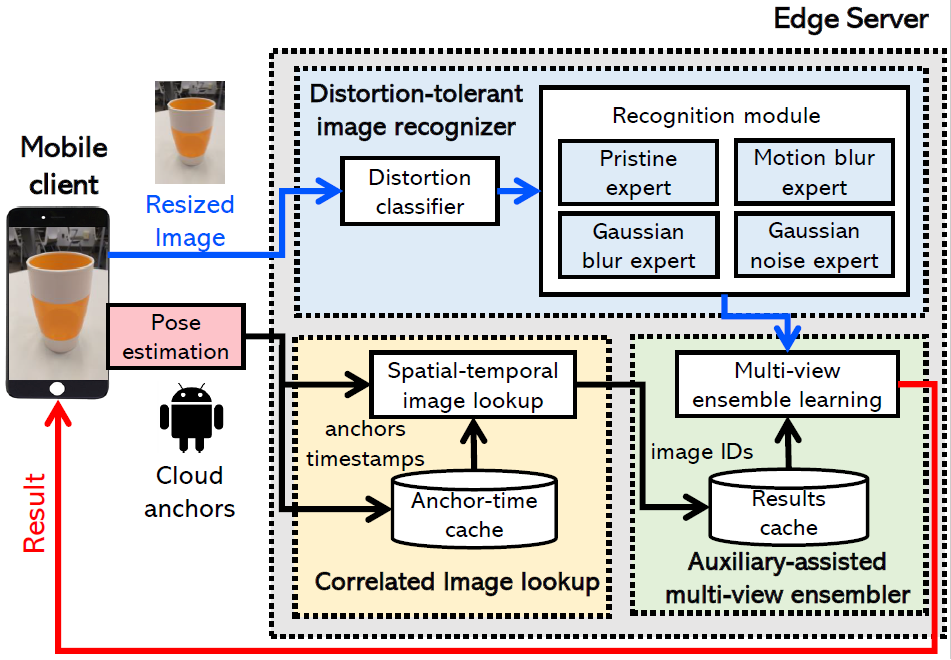

This work is motivated by the unavoidable presence of image distortions, such as motion blur and Gaussian noise, in practical mobile augmented reality systems. A large body of work on mobile-captured images assumes that distorted images can be filtered out. However, our study of realistic augmented reality scenarios demonstrated how pervasive distortions are: for example, for a user wearing a Magic Leap One headset in an indoor corridor of our engineering building, 99.6% of the images captured by the headset's camera would be corrupted by motion blur. We thus developed a solution, CollabAR, whose architecture is shown above. CollabAR runs on an edge server and (1) analyses images to detect whether they are corrupted by noise, (2) uses a collection of “experts,” each optimized to perform well with a specific type of noise, to perform image recognition, and (3) takes advantage of the potential presence of temporally and spatially related images to further improve recognition results. CollabAR achieves over 96% recognition accuracy for images that contain severe multiple distortions, with end-to-end system latency as low as 17.8 ms.
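The three steps above can be sketched as a small pipeline. The following is a minimal, illustrative sketch only and is not the CollabAR implementation: the names `recognize`, `aggregate`, `toy_detect`, and `toy_experts` are hypothetical, the detector and experts are hard-coded stand-ins for trained models, and plain averaging of class probabilities is an assumed placeholder for the aggregation strategy described in the paper.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins: a real system would pass camera frames and
# trained neural networks instead of dicts and lambdas.
Image = dict
Probs = Dict[str, float]


def aggregate(predictions: List[Probs]) -> Tuple[str, Probs]:
    """Average class probabilities over related views (assumed strategy)."""
    if not predictions:
        raise ValueError("need at least one prediction")
    totals: Dict[str, float] = defaultdict(float)
    for probs in predictions:
        for label, p in probs.items():
            totals[label] += p
    averaged = {label: s / len(predictions) for label, s in totals.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged


def recognize(
    images: List[Image],
    detect: Callable[[Image], str],
    experts: Dict[str, Callable[[Image], Probs]],
) -> Tuple[str, Probs]:
    """Step 1: detect distortion; step 2: route to expert; step 3: aggregate."""
    predictions = []
    for image in images:
        distortion = detect(image)      # (1) which distortion is present?
        expert = experts[distortion]    # (2) pick the matching expert
        predictions.append(expert(image))
    return aggregate(predictions)       # (3) combine related views


# Toy detector: reads a label stored with the image (illustration only).
def toy_detect(image: Image) -> str:
    return image["distortion"]


# Toy experts returning fixed class probabilities (illustration only).
toy_experts = {
    "clean": lambda image: {"cup": 0.9, "book": 0.1},
    "motion_blur": lambda image: {"cup": 0.4, "book": 0.6},
    "gaussian_noise": lambda image: {"cup": 0.7, "book": 0.3},
}

# Three spatially or temporally related views of the same object.
views = [
    {"distortion": "motion_blur"},
    {"distortion": "gaussian_noise"},
    {"distortion": "clean"},
]

label, averaged = recognize(views, toy_detect, toy_experts)
print(label, averaged)
```

In this toy run the blurred view on its own favors the wrong class, while combining it with the two related views yields `cup`. This illustrates why related images can help, without saying anything about the accuracy of the real system.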

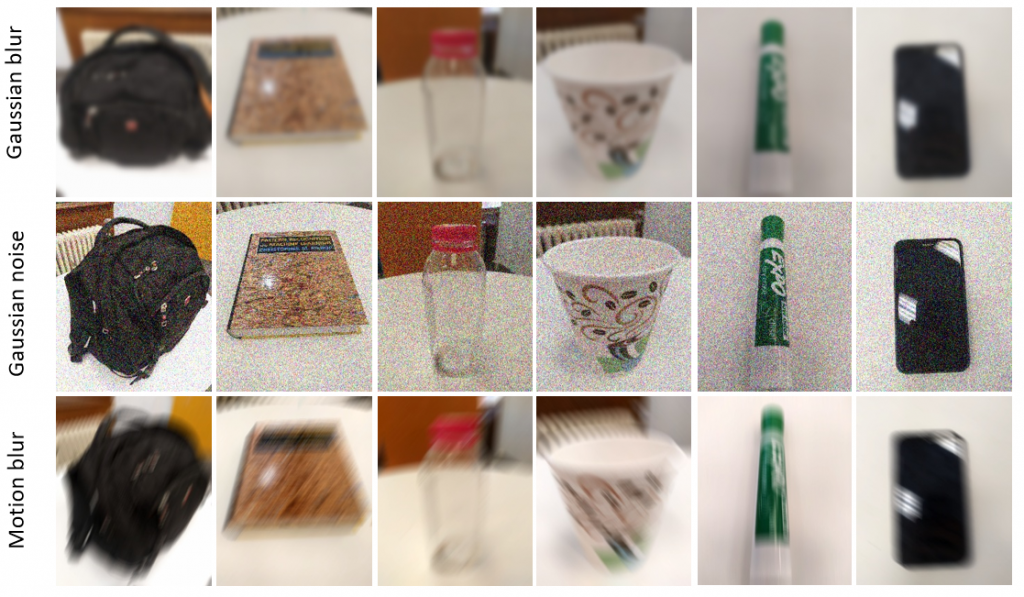

Examples of distorted images in the MVMDD dataset we collected as part of this work.

Our IEEE/ACM IPSN paper describing CollabAR is accompanied by an open-source repository that includes examples of the real-world distortions we observed in realistic mobile augmented reality systems, the new multi-view dataset we collected, the code we developed to generate distortions, and the source code for the core pieces of the CollabAR framework.

- Paper PDF

- Presentation slides

- Video of the presentation — presented by Zida Liu

- Open-source code: winner of the IEEE/ACM IPSN 2020 Best Research Artifact Award, given to “the authors who have contributed the research artifact that is judged to be most novel, easy to use, well documented, and useful to advance research”.

This work is supported in part by NSF grants CSR-1903136 and CNS-1908051 and by the Lord Foundation of North Carolina.