This summer we were fortunate to virtually host three Research Experience for Undergraduates (REU) students in the I^3T Lab, through the Duke University REU Site for Meeting Grand Challenges in Engineering. The students' research is supported in part by NSF grants CSR-1903136, CNS-1908051, and CAREER-2046072, and by an IBM Faculty Award.

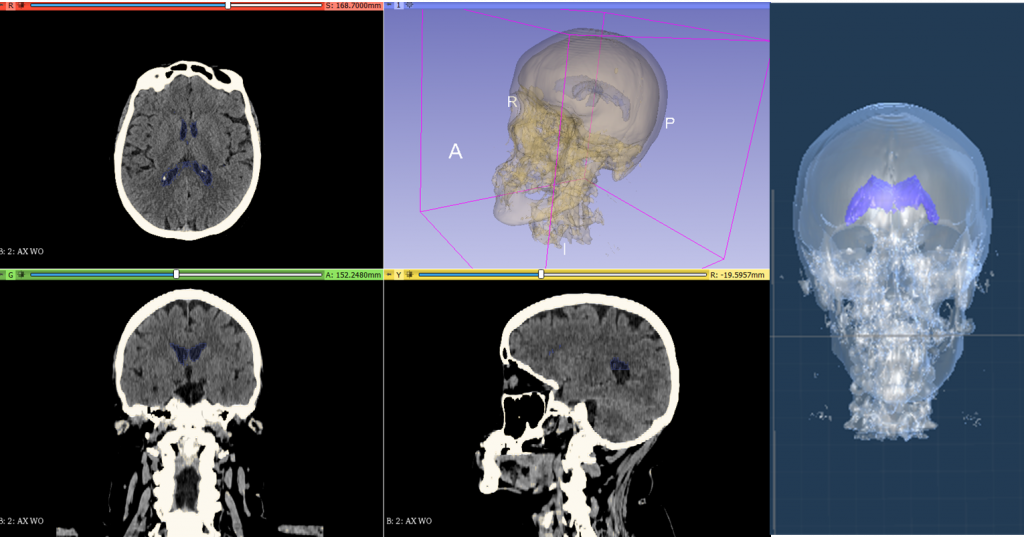

Emily Eisele, who visited us from Widener University and worked with Sarah Eom, implemented image registration for augmented reality-assisted neurosurgery, which we have been examining jointly with Dr. Shervin Rahimpour, an Assistant Professor of Neurosurgery at the University of Utah. Emily extracted a 3D model of the ventricles with a skull frame from a patient’s CT scan using 3D Slicer, developed an Android AR app using ARCore to align the hologram of the ventricle model with a phantom human head using an Iterative Closest Point (ICP) algorithm, and evaluated the method by analyzing the ICP results and the distribution of the hologram centroids. Her work has brought us closer to enabling robust high-precision augmented reality assistance for surgical procedures.
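For readers unfamiliar with ICP, the core loop can be sketched in a few lines. This is a minimal point-to-point illustration using NumPy, not the code from Emily's Android app: the function names `best_rigid_transform` and `icp` are hypothetical, the nearest-neighbor search is brute force, and it assumes the two point sets start out roughly aligned.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that R @ src[i] + t ~ dst[i].

    Uses the SVD-based (Kabsch) solution for already-paired 3D points.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant of -1).
    d = -1.0 if np.linalg.det(Vt.T @ U.T) < 0 else 1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(source, target, iterations=20, tol=1e-9):
    """Align `source` (N x 3) to `target` (M x 3) with point-to-point ICP.

    Returns the accumulated rotation, translation, and the moved source points.
    """
    src = np.asarray(source, dtype=float).copy()
    target = np.asarray(target, dtype=float)
    R_total = np.eye(3)
    t_total = np.zeros(3)
    prev_err = np.inf
    for _ in range(iterations):
        # Pair every source point with its closest target point (brute force).
        d2 = ((src[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        # Solve for the rigid motion that best fits the current pairing.
        R, t = best_rigid_transform(src, target[nn])
        src = src @ R.T + t
        R_total = R @ R_total
        t_total = R @ t_total + t
        # RMS pairing distance measured before this iteration's update.
        err = np.sqrt(d2[np.arange(len(src)), nn].mean())
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, src
```

In a registration setting like the one described above, `source` would hold points sampled from the extracted model and `target` points observed on the phantom head. ICP only converges to a local optimum, so a reasonable initial pose matters.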

Extraction of a 3D model of ventricles from a CT scan and the alignment of the extracted model with a phantom human head for a mobile AR application.

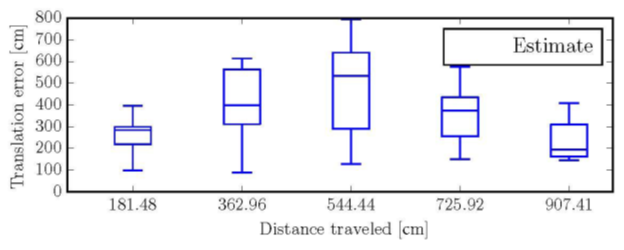

Maria Christenbury, who visited us from Clemson University and worked with Ying Chen, worked on Unreal Engine-based pose error measurement for AR SLAM. She created Blueprint code in Unreal to automatically move the camera and capture high-resolution RGB-D images for every frame, and measured the performance of the ORB-SLAM 2 algorithm on synthetic scenes in Unreal. We are currently expanding on Maria’s work to measure AR SLAM performance for a wider range of synthetic scenes, AR viewport movement trajectories within the scenes, and SLAM algorithms.
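One common way to summarize pose error is the root-mean-square distance between ground-truth and estimated camera positions. The sketch below is an illustration only, not the metric code used in this project: the function name `translation_rmse` is hypothetical, and it assumes both trajectories are already time-synchronized and expressed in the same coordinate frame and units.

```python
import math


def translation_rmse(ground_truth, estimated):
    """RMS Euclidean distance between paired camera positions.

    Both arguments are equal-length sequences of (x, y, z) tuples, where
    entry i of each sequence describes the same frame.
    """
    if len(ground_truth) != len(estimated) or len(ground_truth) == 0:
        raise ValueError("trajectories must be non-empty and the same length")
    squared_errors = [
        sum((g - e) ** 2 for g, e in zip(gt_pos, est_pos))
        for gt_pos, est_pos in zip(ground_truth, estimated)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

With a simulated scene, the ground-truth positions can come directly from the scripted camera path, which is one appeal of evaluating SLAM on synthetic data.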

Measuring the performance of ORB-SLAM 2 on Unreal Engine-based indoor scenes.

Megan Mott, who visited us from UNC Chapel Hill and worked with Tim Scargill, worked on improving environment understanding in smartphone augmented reality, specifically more accurate detection of empty areas on planes (e.g., a table top). She evaluated the inaccuracy of plane boundaries detected by Google’s ARCore, and developed an AR app to transmit RGB and depth images of the environment to an edge server. She wrote a script (using Python and OpenCV) to detect object boundaries and return results to the AR device, achieving end-to-end latency of less than 1 second. This work has demonstrated how computer vision tasks related to environment understanding can be performed at sufficiently low latency on an edge server, to facilitate more realistic AR interactions. We are expanding on this work in continuing research into edge-based AR environment characterization.
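To give a rough sense of how depth data can separate occupied from empty regions of a plane, here is a simplified stand-in written with NumPy. It is not Megan's OpenCV script: it swaps boundary detection for a plain depth threshold, the names `occupied_mask` and `bounding_box` are hypothetical, and it assumes a camera looking straight at the plane from a known distance.

```python
import numpy as np


def occupied_mask(depth_m, plane_depth_m, min_height_m=0.01):
    """Boolean mask that is True where something rises above the plane.

    depth_m is a 2D array of per-pixel depth in meters. Pixels with a depth
    of 0 are treated as invalid readings and are never marked as occupied.
    """
    depth_m = np.asarray(depth_m, dtype=float)
    return (depth_m > 0) & (depth_m < plane_depth_m - min_height_m)


def bounding_box(mask):
    """Inclusive (top, left, bottom, right) box around True pixels, or None."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])
```

Everything outside the returned box (and any unoccupied pixels inside it) is a candidate empty area where virtual content could be placed without overlapping a real object.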