Seven ECE and CS independent undergraduate research projects were completed in the I^3T lab in Fall 2020. The projects are summarized below. This work is supported in part by NSF grants CSR-1903136 and CNS-1908051, an IBM Faculty Award, and the Lord Foundation of North Carolina.

Evaluating Object Detection Models through Photo-Realistic Synthetic Scenes in Game Engines

Achintya Kumar and Brianna Butler

We build an automatic pipeline to evaluate object recognition algorithms within photo-realistic 3D scenes generated in game engines, namely Unity (with the High Definition Render Pipeline) and Unreal. Specifically, we test detection accuracy and intersection over union (IoU) under different lighting conditions, reflection and transparency levels, camera or object rotations, blurring, occlusions, and object textures. The pipeline collects a large-scale dataset under these conditions without manual capture by controlling multiple parameters in the game engines. For example, we control illumination by changing the lux values and types of the light sources; we control reflection and transparency levels using custom render pipelines; and we control texture by building a texture library and randomly assigning each object a texture from it. Another important component of the pipeline is per-pixel ground-truth generation: the RGB value of each pixel in the ground-truth image indicates the ID of the object at that pixel. With this ground truth, detection accuracy and IoU are computed without manual labeling.
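
To illustrate how per-pixel ground truth removes the need for manual labels, the sketch below decodes an object ID from each pixel's RGB value, derives that object's ground-truth bounding box, and computes IoU against a predicted box. It is a minimal sketch under stated assumptions: the 24-bit packing of RGB into an ID, the inclusive pixel-box convention, the 0.5 IoU threshold for a correct detection, and all function names are hypothetical, not the project's actual encoding or code.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B)
Box = Tuple[int, int, int, int]       # (x_min, y_min, x_max, y_max), inclusive pixel coords


def rgb_to_object_id(pixel: Pixel) -> int:
    """Hypothetical encoding: pack the 24-bit RGB value into one integer ID (0 = background)."""
    r, g, b = pixel
    return (r << 16) | (g << 8) | b


def ground_truth_box(gt_image: List[List[Pixel]], object_id: int) -> Optional[Box]:
    """Tightest box around every pixel whose decoded ID equals object_id."""
    xs, ys = [], []
    for y, row in enumerate(gt_image):
        for x, pixel in enumerate(row):
            if rgb_to_object_id(pixel) == object_id:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # object fully occluded or outside the camera view
    return (min(xs), min(ys), max(xs), max(ys))


def box_area(box: Box) -> int:
    return (box[2] - box[0] + 1) * (box[3] - box[1] + 1)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two inclusive pixel boxes."""
    ix = min(a[2], b[2]) - max(a[0], b[0]) + 1
    iy = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(0, ix) * max(0, iy)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


# Usage on a toy 4x4 ground-truth image containing one object (decoded ID 1).
BG, OBJ = (0, 0, 0), (0, 0, 1)
gt = [[BG, BG, BG, BG],
      [BG, OBJ, OBJ, BG],
      [BG, OBJ, OBJ, BG],
      [BG, BG, BG, BG]]
gt_box = ground_truth_box(gt, object_id=1)
iou = box_iou(gt_box, (1, 1, 3, 3))   # detector's (hypothetical) predicted box
hit = iou >= 0.5                       # assumed threshold for counting a correct detection
```

Because every rendered frame comes with its own ID image, the same loop can run over thousands of automatically captured frames, one per lighting, texture, or occlusion setting.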

Gaze-based Cognitive Context Recognition with Deep Neural Networks

Hunter Gregory and Steven Li

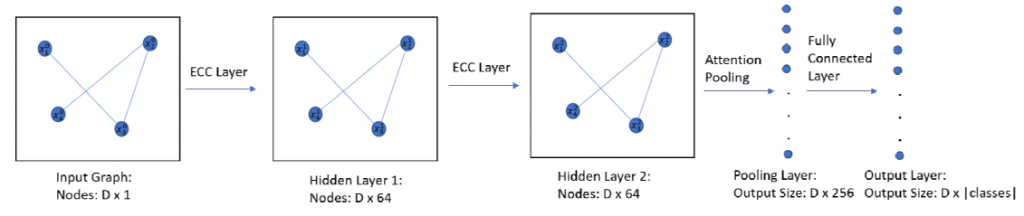

In this project, we investigate the performance of different deep neural network (DNN) models on gaze-based cognitive context sensing. Unlike the convolutional neural network (CNN)-based classifier introduced in our ACM SenSys’20 GazeGraph work, we designed five new DNN models based on, respectively, vanilla long short-term memory (LSTM), bidirectional LSTM, attention-based LSTM, transformer, and graph convolutional network (GCN) architectures. Our results indicate that the GCN-based classifier, which can efficiently capture the spatial-temporal information embedded in GazeGraph (a graph-based data structure), achieves the highest recognition accuracy. Future work could combine GazeGraph with GCNs for more complex gaze-based sensing tasks.
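
The summary does not specify how GazeGraph's nodes and edges are defined or how the GCN classifier is structured. As a hedged illustration of what a graph convolution does, the sketch below implements only the standard propagation rule of Kipf and Welling, H' = ReLU(Â H W) with Â = D̃^(-1/2) (A + I) D̃^(-1/2), where D̃ is the degree matrix of A + I. The three-node graph, its features, the identity weights, and all function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """A_hat = D^-1/2 (A + I) D^-1/2, where D is the degree matrix of A + I."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One graph-convolution layer: each node mixes its own and its neighbors' features."""
    h = matmul(matmul(normalized_adjacency(adj), features), weights)
    return [[max(0.0, v) for v in row] for row in h]  # ReLU


# Usage on a hypothetical 3-node path graph (e.g., three linked gaze events).
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # hypothetical 2-D node features
weights = [[1.0, 0.0], [0.0, 1.0]]                # identity weights for illustration
a_hat = normalized_adjacency(adj)
out = gcn_layer(adj, features, weights)
```

A graph-level classifier would typically stack several such layers with learned weights, pool the node embeddings (for example by averaging), and apply a softmax output layer; that part is omitted here.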

Measuring Resource Consumption in Mobile AR in the Context of a Virtual Art Gallery

Orion Hsu

Augmented reality applications are resource-intensive because they must simultaneously map the environment, track the user’s location within it, and render 3D virtual objects. Here we studied how rendering-side factors (number of virtual objects, object scale and complexity, and shadow quality) affect resource consumption (CPU and memory utilization) and the resulting user experience (frame rate). We examined this in the context of a virtual art gallery, a real-world use case in which it is desirable to render multiple complex virtual objects, such as detailed sculptures. Our experiments reveal significant potential resource savings, which can inform both the design of AR experiences and runtime adjustments based on the current state of the mobile device.
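
CPU and memory sampling is platform-specific, but the frame-rate side of such measurements can be sketched from per-frame timestamps alone. The sketch below summarizes mean frame rate, worst frame time, and the share of frames that miss a target frame budget, then applies a simple runtime adjustment to shadow quality. The timestamp log, the 60 FPS target, the three shadow tiers, the 90% threshold, and all names are hypothetical illustrations, not the project's measurement setup or policy.

```python
from typing import Dict, List

SHADOW_LEVELS = ["off", "low", "high"]  # hypothetical shadow-quality tiers


def frame_rate_stats(timestamps_s: List[float], target_fps: float = 60.0) -> Dict[str, float]:
    """Summarize rendering performance from per-frame timestamps (seconds)."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two frame timestamps")
    frame_times = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    budget = 1.0 / target_fps                     # seconds available per frame
    total = timestamps_s[-1] - timestamps_s[0]
    return {
        "mean_fps": len(frame_times) / total,
        "worst_frame_ms": max(frame_times) * 1000.0,
        "late_frame_ratio": sum(t > budget for t in frame_times) / len(frame_times),
    }


def next_shadow_level(current: str, stats: Dict[str, float], target_fps: float = 60.0) -> str:
    """Hypothetical policy: lower shadow quality when well below target, raise it when no frame is late."""
    i = SHADOW_LEVELS.index(current)
    if stats["mean_fps"] < 0.9 * target_fps and i > 0:
        return SHADOW_LEVELS[i - 1]
    if stats["late_frame_ratio"] == 0.0 and i < len(SHADOW_LEVELS) - 1:
        return SHADOW_LEVELS[i + 1]
    return current


# Usage: four frames, one of which takes about 48 ms instead of about 16 ms.
stats = frame_rate_stats([0.0, 0.016, 0.032, 0.080, 0.096], target_fps=60.0)
level = next_shadow_level("high", stats)
```

Tracking the late-frame ratio alongside the mean matters because occasional long frames are perceptible as stutter even when the average frame rate looks acceptable.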

Impact of Inertial Data on Mobile AR Hologram Drift

Priya Rathinavelu

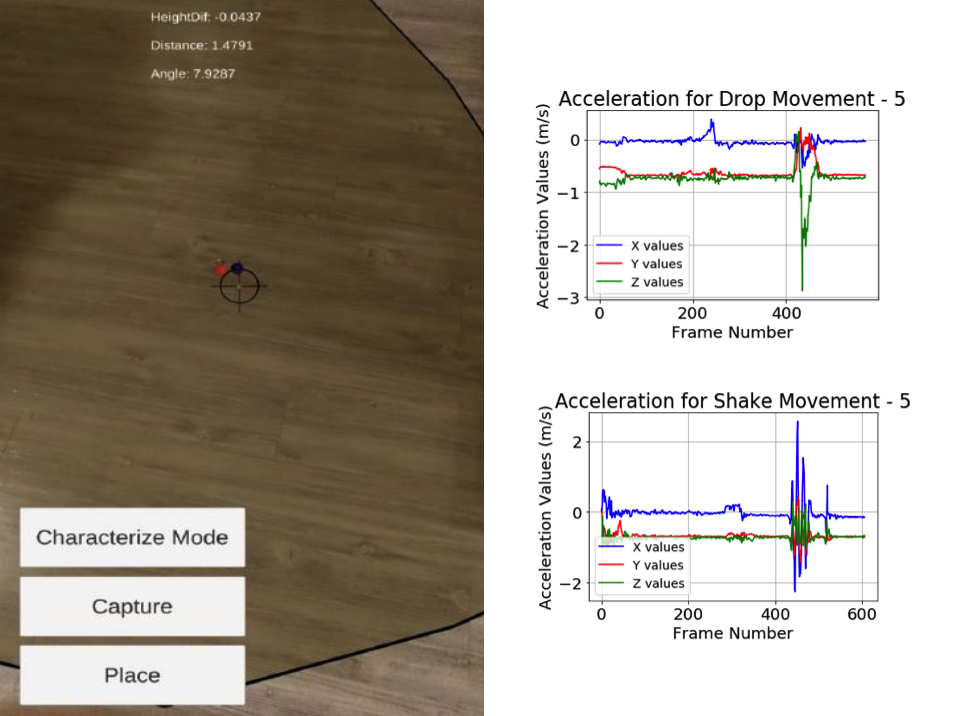

A common problem in mobile AR is hologram drift, where a hologram moves away from the position where it was originally placed. Drift is caused by errors in tracking the device pose, which is performed by a visual-inertial SLAM (Simultaneous Localization and Mapping) algorithm. In our previous and ongoing work, we have been examining the relationship between drift and the visual data. Another key factor in these errors is the inertial input (the accelerometer and gyroscope readings): some types of device movement are significantly more challenging than others. In this project, we developed a custom AR application that captures both accelerometer data and drift, and tested it with a variety of common movements. We then explored how a series of accelerometer readings can be characterized, and how those characteristics relate to the drift that occurs.
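
One way to relate a movement's accelerometer readings to the resulting drift is to summarize each recorded window with a few magnitude statistics, measure drift as the distance the hologram moved from where it was placed, and correlate the two across trials. The sketch below does exactly that; the choice of features, the Euclidean drift metric, the use of Pearson correlation, the toy trial values, and all names are assumptions for illustration, not the project's actual characterization.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def drift_distance(placed: Vec3, observed: Vec3) -> float:
    """Drift as the Euclidean distance between the placed and the currently observed hologram position."""
    return math.dist(placed, observed)


def accel_features(samples: Sequence[Vec3]) -> Dict[str, float]:
    """Characterize one window of accelerometer readings by statistics of the vector magnitude."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return {
        "mean_mag": statistics.mean(mags),
        "std_mag": statistics.pstdev(mags),
        "peak_mag": max(mags),
    }


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Usage with hypothetical trials: (accelerometer window, placed position, observed position).
trials = [
    ([(0.0, 0.0, 9.8), (0.1, 0.0, 9.7), (0.0, 0.1, 9.9)], (0.0, 0.0, 0.0), (0.0, 0.0, 0.01)),
    ([(1.5, 0.2, 9.1), (-1.2, 0.4, 10.6), (2.0, -0.3, 8.7)], (0.0, 0.0, 0.0), (0.05, 0.0, 0.02)),
    ([(4.0, 1.0, 6.0), (-3.5, -2.0, 13.0), (5.0, 0.5, 5.5)], (0.0, 0.0, 0.0), (0.2, 0.1, 0.05)),
]
std_mags = [accel_features(window)["std_mag"] for window, _, _ in trials]
drifts = [drift_distance(placed, observed) for _, placed, observed in trials]
r = pearson(std_mags, drifts)
```

With real recordings, each movement type (for example walking, turning in place, or shaking the device) would contribute many windows, and other features such as gyroscope statistics could be added to the feature dictionary.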

Characterizing the Statistics of Virtual Reality Viewport Pose

Ashley (Hojung) Kwon

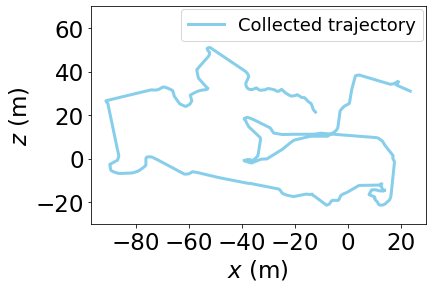

We focus on collecting and modeling the statistics of the viewport pose in VR systems. Because no suitable public dataset is available, we first collect a viewport pose dataset by implementing desktop VR systems using three VR games from the Unity store. Participants explore the VR scenes freely, using the arrow keys and the mouse to control translational and rotational viewport movement. Meanwhile, our system records the viewport trajectory by logging the viewport position and orientation at each VR frame. In total, we obtained 240 minutes of viewport trajectory records. After data collection, we extract from the dataset the distributions of translational and rotational viewport movement, as well as the correlation between them.
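
The extraction step can be sketched as follows: take consecutive per-frame poses, compute how far the viewport translated and rotated between frames, and then summarize and correlate the two series. The record layout (x, y, z, yaw, pitch in degrees), the yaw wrap-around handling, the simplification of treating rotation as the Euclidean norm of the yaw and pitch changes, and all names are assumptions; the summary does not describe the project's actual pose format or statistics.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

# Hypothetical per-frame record: (x, y, z, yaw_deg, pitch_deg)
Pose = Tuple[float, float, float, float, float]


def wrap_deg(angle: float) -> float:
    """Map an angle difference into [-180, 180) degrees so 179 -> -179 counts as 2 degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def per_frame_motion(trajectory: Sequence[Pose]) -> Tuple[List[float], List[float]]:
    """Translational distance and (approximate) rotational change between consecutive frames."""
    trans, rot = [], []
    for prev, cur in zip(trajectory, trajectory[1:]):
        trans.append(math.dist(prev[:3], cur[:3]))
        d_yaw = wrap_deg(cur[3] - prev[3])
        d_pitch = cur[4] - prev[4]
        rot.append(math.hypot(d_yaw, d_pitch))  # simplification, not true angular distance
    return trans, rot


def summarize(values: Sequence[float]) -> Dict[str, float]:
    return {"mean": statistics.mean(values), "std": statistics.pstdev(values)}


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between translational and rotational movement."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Usage on a hypothetical three-frame trajectory that crosses the yaw = 180 boundary.
trajectory = [(0.0, 0.0, 0.0, 179.0, 0.0),
              (0.1, 0.0, 0.0, -179.0, 0.0),
              (0.3, 0.0, 0.0, -175.0, 0.0)]
trans, rot = per_frame_motion(trajectory)
trans_stats, rot_stats = summarize(trans), summarize(rot)
r = pearson(trans, rot)
```

For full distributions rather than summary statistics, the per-frame `trans` and `rot` values from all sessions could be pooled and binned into histograms.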