We are presenting at three CPS-IoT Week sessions this week:

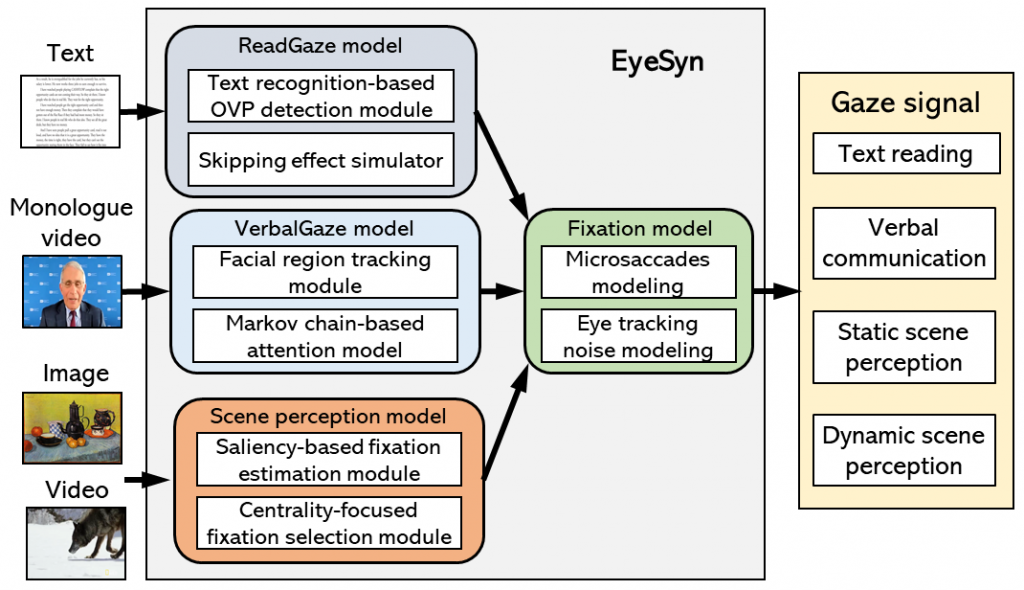

IPSN’22: "EyeSyn: Psychology-inspired Eye Movement Synthesis for Gaze-based Activity Recognition" presents the first method for synthesizing, without human involvement, eye movement data for training eye movement-based activity classifiers for AR and VR. [PDF] [Code and data] [NSF Discoveries news item covering this work]

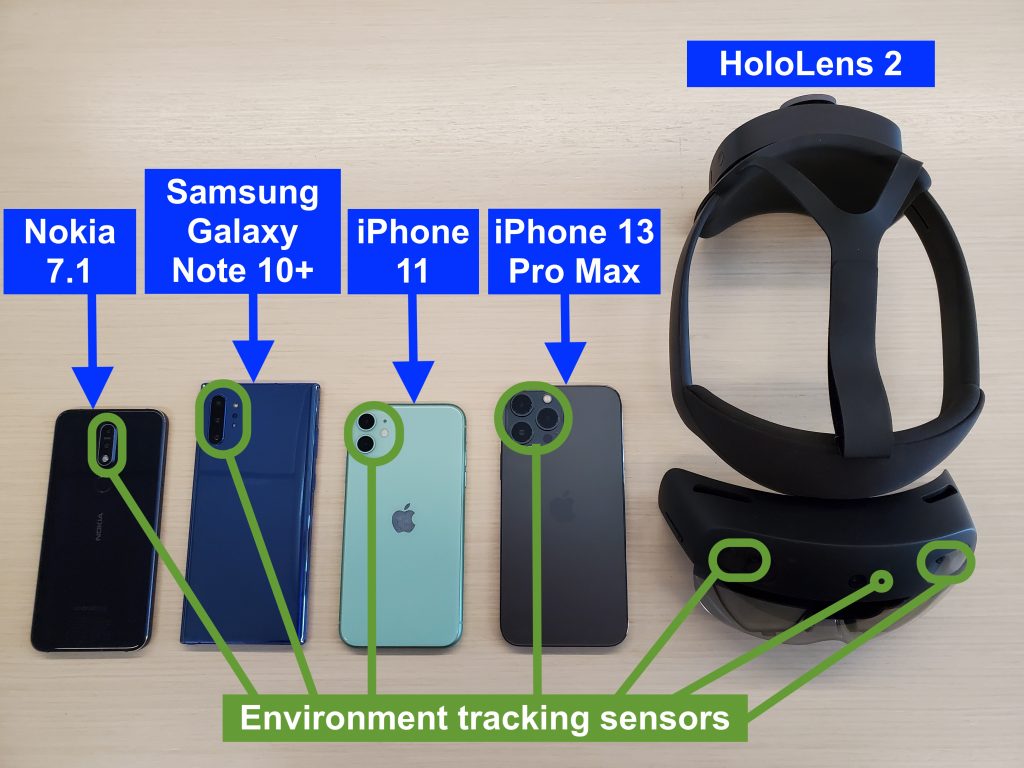

Workshop on Cyber-Physical-Human System Design and Implementation (CPHS): "Here To Stay: A Quantitative Comparison of Virtual Object Stability in Markerless Mobile AR" presents cross-platform measurements of AR virtual object displacements observed across a wide range of environments and user actions. [PDF]

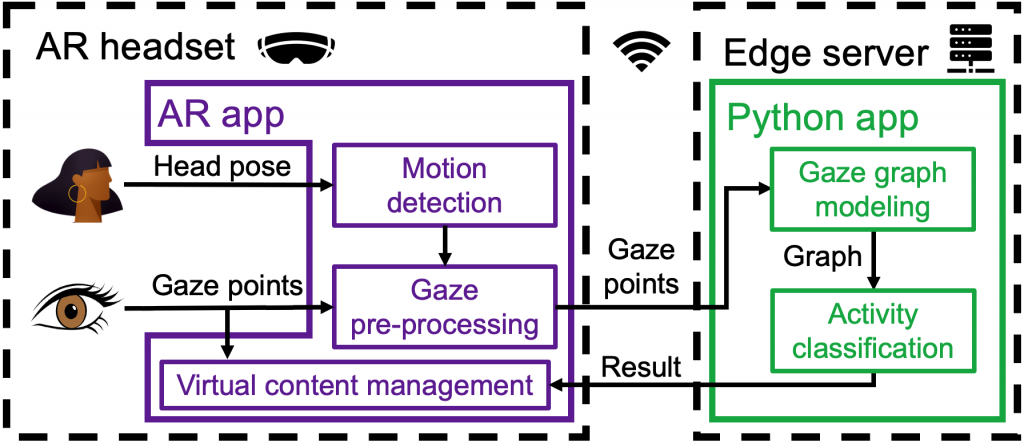

IPSN’22 demo session: "Catch My Eye: Gaze-Based Activity Recognition in an Augmented Reality Art Gallery" presents the first system that incorporates DNN-based activity recognition from user gaze into a realistic mobile AR app. [PDF] [Video of the demo]