Five independent undergraduate research projects were completed in the I³T lab this semester. In these projects, students investigated different aspects of mobile augmented reality (AR), including edge-based integration of AR with low-end IoT devices, user perception of different types of shadows, and mechanisms for multi-user coordination in mobile AR. Four of the projects are highlighted below.

This work is supported in part by NSF grants CSR-1903136 and CNS-1908051, and by the Lord Foundation of North Carolina.

Semantic Understanding of the Environment for Augmented Reality

Joseph DeChicchis

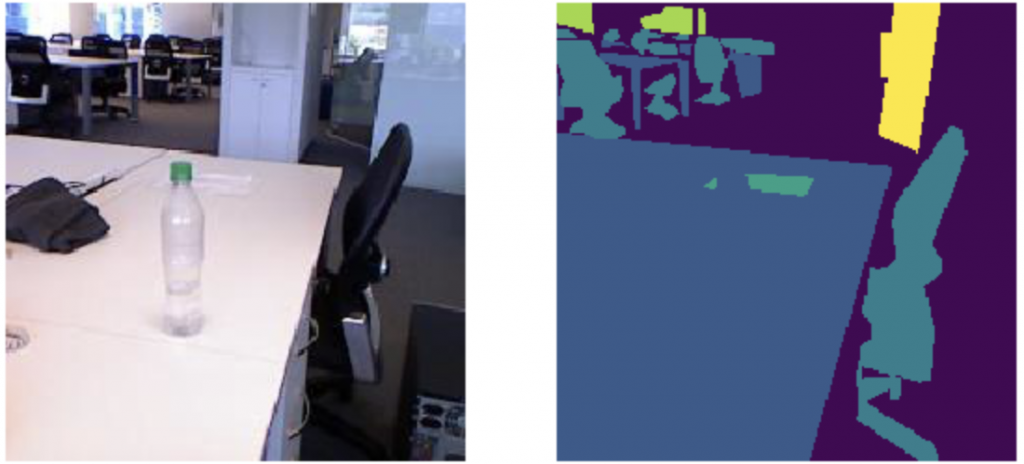

In this research project, we are investigating how to enhance augmented reality (AR) experiences by incorporating an understanding of the user's environment. In particular, we are focusing on semantic scene understanding, using computer vision to identify which objects are present in a user's environment. We are developing and training a segmentation model on indoor scene data and deploying it on an edge server. The final deployment will consist of a Magic Leap One AR device, an edge server, and an Intel RealSense RGB-D camera. Images from the camera will be used to extract semantic information about the scene, and this information will be sent to the Magic Leap One for rendering.
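
Sending a full per-pixel mask to the headset for every frame can be costly, so one option is for the edge server to reduce the segmentation output to a compact per-object summary before transmitting it. The sketch below assumes the model's output has already been converted into a 2D label map aligned with a depth image in meters; the function name, message fields, and class mapping are hypothetical and are not the project's actual protocol.

```python
import json
from statistics import median
from typing import Dict, List


def summarize_segmentation(labels: List[List[int]], depth_m: List[List[float]],
                           class_names: Dict[int, str]) -> str:
    """Collapse a per-pixel label map into one JSON record per detected class.

    Hypothetical message format: each record holds the class name, pixel count,
    bounding box [x_min, y_min, x_max, y_max], and median depth in meters.
    """
    stats: Dict[int, Dict[str, list]] = {}
    for y, row in enumerate(labels):
        for x, label in enumerate(row):
            if label not in class_names:
                continue  # skip unlabeled / background pixels
            s = stats.setdefault(label, {"xs": [], "ys": [], "depths": []})
            s["xs"].append(x)
            s["ys"].append(y)
            d = depth_m[y][x]
            if d > 0:  # treat 0 as "no depth reading" and leave it out
                s["depths"].append(d)

    objects = []
    for label, s in sorted(stats.items()):
        objects.append({
            "class": class_names[label],
            "pixels": len(s["xs"]),
            "bbox": [min(s["xs"]), min(s["ys"]), max(s["xs"]), max(s["ys"])],
            "median_depth_m": median(s["depths"]) if s["depths"] else None,
        })
    return json.dumps({"objects": objects})
```

A summary like this is far smaller than the mask itself; the trade-off is that the headset loses exact object outlines, which may or may not matter for a given rendering effect.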

Augmented Reality Digital Twin for an Internet-of-Things System

Michael Zhang

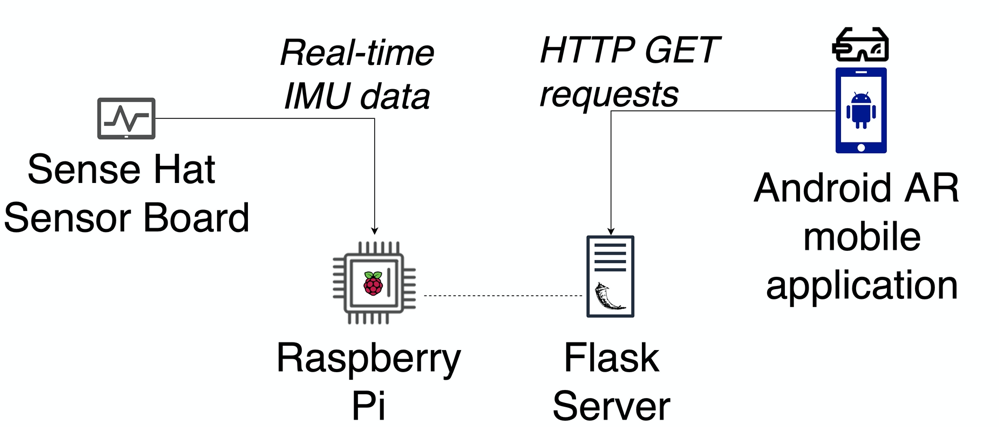

Internet-of-Things (IoT) devices gather data to perform a variety of actions. These devices either process data locally or offload the processing to more powerful devices. The growing number of "smart" devices in the consumer space paves the way for us to investigate their applications in augmented reality. IoT devices could extend the mobile AR experience by providing additional sources of data that better contextualize the user's environment. To explore the nascent intersection of mobile AR and IoT, this digital twin proof-of-concept lets an AR application interact with a nearby inertial measurement unit (IMU).

Michael demonstrating his work at a Duke University ECE Department undergraduate research showcase.

Deployed on a smartphone, the AR application displays a hologram that mimics the orientation of a nearby device equipped with an IMU. This digital twin idea allows the hologram either to represent the surroundings more faithfully (e.g., a 2D hologram reflecting the orientation of a 3D object) or to act as an endpoint controlled remotely (e.g., an external device controlling a 2D hologram).
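
Many IMUs report orientation as a quaternion, so mirroring a device in a hologram typically starts with converting that quaternion into angles the renderer can apply. The sketch below assumes a unit quaternion in (w, x, y, z) order and the common Z-Y-X (yaw, pitch, roll) convention; `hologram_angle_2d` is a hypothetical helper, and a real IMU and AR framework may use different axis orders and handedness that would need to be remapped.

```python
import math
from typing import Tuple


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Convert a unit quaternion (w, x, y, z) to (roll, pitch, yaw) in degrees.

    Uses the Z-Y-X (yaw-pitch-roll) convention.
    """
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    sin_pitch = 2 * (w * y - z * x)
    # Clamp to avoid a math domain error when rounding pushes |sin_pitch| past 1.
    pitch = math.copysign(math.pi / 2, sin_pitch) if abs(sin_pitch) >= 1 else math.asin(sin_pitch)
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)


def hologram_angle_2d(w: float, x: float, y: float, z: float) -> float:
    """In-plane rotation (degrees) for a flat 2D hologram.

    A 2D hologram can mirror only one rotation axis; this sketch assumes it
    follows the device's yaw (heading) and ignores roll and pitch.
    """
    return quaternion_to_euler(w, x, y, z)[2]


# Example: an IMU reading for a 90-degree turn about the vertical (Z) axis.
half = math.radians(90) / 2
print(round(hologram_angle_2d(math.cos(half), 0.0, 0.0, math.sin(half)), 3))  # 90.0
```

For a 3D hologram, all three angles (or the quaternion itself) would be passed to the renderer instead of only the yaw.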

Performance Trade-offs of Dynamic Shadows for Augmented Reality

Davis Booth

We used user research and device performance analysis to understand which features of augmented reality impose the largest energy burdens on devices, and which of these features can be improved or forgone to optimize the user experience. This semester in particular, we found that shadow calculations were computationally expensive on iOS devices. As a result, we set out to reduce the total energy devices spend on calculating shadows, which we did by significantly reducing the quality of shadows in SwiftShot™, an AR game developed by Apple. This substantially reduced the compute power required on iPhones. Finally, we conducted a user study comparing a build of SwiftShot™ with high-quality shadows to a build with low-quality shadows. Participants reported that they noticed no difference in the shadows but, interestingly, consistently stated that the low-quality shadow build felt faster. These results indicate that reducing the quality of dynamic shadows, which we originally believed could be detrimental to user experience, may actually improve it.
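
A back-of-the-envelope calculation helps explain why lowering shadow resolution saves so much work. The sketch below assumes dynamic shadows are rendered into a single square shadow map with 32-bit depth per texel that is redrawn every frame; the resolutions are illustrative only and are not the settings used in the SwiftShot™ builds, and the helper names are hypothetical.

```python
def shadow_map_cost(resolution: int, bytes_per_texel: int = 4) -> tuple:
    """Texels written per shadow pass and depth-buffer size in MiB.

    Assumes one square shadow map storing 32-bit depth (4 bytes) per texel.
    """
    texels = resolution * resolution
    return texels, texels * bytes_per_texel / 1024 ** 2


def relative_texel_cost(high_res: int, low_res: int) -> float:
    """How many times more texels the higher-resolution shadow map contains."""
    return (high_res / low_res) ** 2


for res in (4096, 2048, 1024, 512):
    texels, mib = shadow_map_cost(res)
    print(f"{res}x{res}: {texels:,} texels, {mib:g} MiB")
```

Because texel count grows with the square of the resolution, halving the shadow map's width and height cuts the per-frame texel count by a factor of four (for example, from 16 MiB at 2048x2048 to 4 MiB at 1024x1024). In SceneKit this resolution is exposed as `SCNLight.shadowMapSize`; whether that was the exact setting changed in the study is not stated here.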

Designing and Developing Systems for Multi-device Image Recognition

Jason Zhou

In this project, we designed and developed a model to detect overlapping fields of view for two or more devices. This allows multiple edge-connected devices that are capturing the same object to collaborate on image recognition tasks and thereby improve recognition accuracy. Using Anchors from Google's ARCore library, we were able to relate the devices' coordinate systems to one another. Additionally, we modeled the field of view of each device's camera as a square frustum and used the separating axis theorem (SAT) to detect collisions between two viewing frustums in real time. Future work could optimize parameters such as the near and far clipping plane distances and explore alternative ways of calculating the vertices of the viewing frustums.
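
The frustum test can be sketched as follows. For two convex polyhedra, SAT says they are disjoint if and only if their projections separate on at least one candidate axis: a face normal of either shape, or the cross product of an edge from each. This minimal version assumes both device poses are already expressed in the shared anchor frame, that each camera looks down its local -Z axis (an OpenGL-style convention), that rotation is yaw-only, and that `fov_deg`, `near`, and `far` are known; all function names are hypothetical and are not ARCore APIs.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Corner indices: 0-3 lie on the near plane, 4-7 on the far plane (same winding).
FACES = [(0, 1, 2, 3), (4, 5, 6, 7), (0, 1, 5, 4), (1, 2, 6, 5), (2, 3, 7, 6), (3, 0, 4, 7)]
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (4, 5), (5, 6), (6, 7), (7, 4),
         (0, 4), (1, 5), (2, 6), (3, 7)]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def frustum_vertices(position: Vec3, yaw_deg: float, fov_deg: float,
                     near: float, far: float) -> List[Vec3]:
    """World-space corners of a square viewing frustum (same horizontal and vertical FOV)."""
    tan_half = math.tan(math.radians(fov_deg) / 2)
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    corners = []
    for d in (near, far):
        h = d * tan_half  # half-width of the cross-section at distance d
        for x, y in ((-h, -h), (h, -h), (h, h), (-h, h)):
            z = -d
            # Rotate about the Y axis, then translate into the shared frame.
            corners.append((c * x + s * z + position[0], y + position[1],
                            -s * x + c * z + position[2]))
    return corners


def project(vertices: List[Vec3], axis: Vec3) -> Tuple[float, float]:
    values = [dot(v, axis) for v in vertices]
    return min(values), max(values)


def candidate_axes(a: List[Vec3], b: List[Vec3]) -> List[Vec3]:
    """Face normals of both frustums plus cross products of their edge directions."""
    def normals(v: List[Vec3]) -> List[Vec3]:
        return [cross(sub(v[f[1]], v[f[0]]), sub(v[f[2]], v[f[0]])) for f in FACES]

    def edges(v: List[Vec3]) -> List[Vec3]:
        return [sub(v[j], v[i]) for i, j in EDGES]

    crosses = [cross(ea, eb) for ea in edges(a) for eb in edges(b)]
    return normals(a) + normals(b) + crosses


def frustums_overlap(a: List[Vec3], b: List[Vec3], eps: float = 1e-12) -> bool:
    for axis in candidate_axes(a, b):
        if dot(axis, axis) < eps:
            continue  # parallel edges yield a zero-length axis; skip it
        min_a, max_a = project(a, axis)
        min_b, max_b = project(b, axis)
        if max_a < min_b or max_b < min_a:
            return False  # found a separating axis: the frustums are disjoint
    return True  # no separating axis among the candidates: the frustums intersect


a = frustum_vertices((0, 0, 0), 0, 60, 0.1, 5)
b = frustum_vertices((1, 0, 0), 0, 60, 0.1, 5)
print(frustums_overlap(a, b))  # True
```

The near and far distances directly change the frustum volume, so they act as the tunable parameters mentioned above: a larger `far` value reports overlap for objects farther away, at the risk of flagging devices that cannot actually resolve the same object.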