Nine independent undergraduate research projects in ECE and CS on next-generation AR and VR were completed in the lab this semester. This work is supported in part by NSF grants CSR-1903136, CNS-1908051, and CAREER-2046072, and by an IBM Faculty Award.

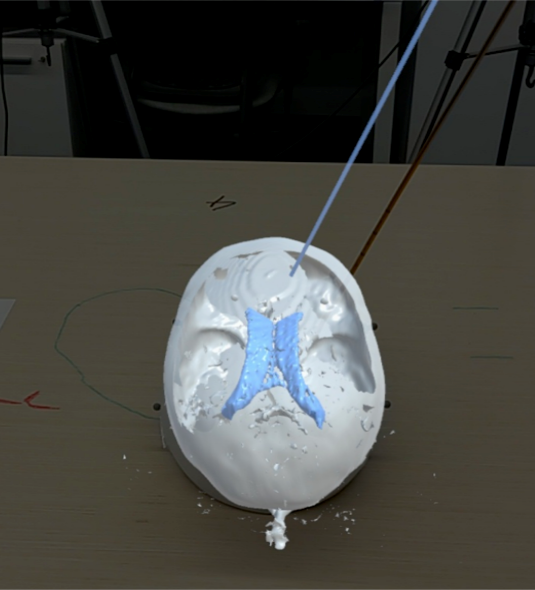

AR-assisted Neurosurgery

Seijung Kim

Experimental setup (left), and the view of holograms overlaid in AR (right)

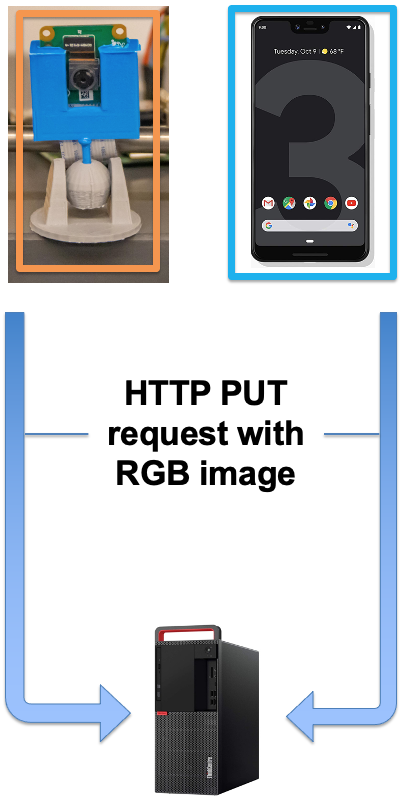

IoT Camera-Aided AR Environment Characterization

Achilles Dabrowski

The stability of virtual objects in augmented reality (AR) environments is dependent on the properties of the real world environment, such as the amount of light or visible textures. In previous work we explored how these properties may be detected from the camera image of an augmented reality device. However, it is desirable that AR environments can also be characterized, and their properties monitored, without an AR device, so that optimal conditions can be maintained or information sent to AR devices ahead of time.

In this project we explored how a low-cost camera (a Raspberry Pi Camera v2), held in a 3D-printed mount, can be used in an edge computing architecture to capture and characterize images of an AR environment. We conducted experiments to assess how environment characterization metrics differ when captured on a Raspberry Pi camera and a Google Pixel 3 smartphone, varying both light level and visible textures. We established that the proposed technique is a valid method of environment characterization, but that camera image resolution, smartphone camera exposure adjustments and camera pose similarity are important factors to consider. This project provides a foundation for upcoming submissions on automatic AR environment optimization, with future work incorporating the control of other Internet of Things devices such as smart lightbulbs.
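As a rough illustration of what characterizing an image for light level and visible texture can look like, the sketch below computes two simple proxies from a grayscale frame: mean brightness and mean gradient magnitude (edge strength). The function name `characterize` and the choice of these two metrics are assumptions made for illustration; the project's actual metrics and implementation are not described here.

```python
from math import hypot


def characterize(gray):
    """Return (mean_brightness, mean_edge_strength) for a grayscale image.

    `gray` is a 2D list of pixel intensities in the range 0-255.
    Both metrics are illustrative proxies, not the lab's actual metrics.
    """
    h, w = len(gray), len(gray[0])
    brightness = sum(sum(row) for row in gray) / (h * w)

    total = 0.0
    count = 0
    # Central differences on interior pixels approximate the image gradient.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y][x + 1] - gray[y][x - 1]) / 2
            gy = (gray[y + 1][x] - gray[y - 1][x]) / 2
            total += hypot(gx, gy)
            count += 1
    edge_strength = total / count if count else 0.0
    return brightness, edge_strength


# Synthetic frames: a uniform wall and a checkerboard of 2x2 blocks.
flat = [[100] * 8 for _ in range(8)]
checker = [[255 if (x // 2 + y // 2) % 2 else 0 for x in range(8)]
           for y in range(8)]

flat_metrics = characterize(flat)
checker_metrics = characterize(checker)
```

A textureless surface yields zero edge strength under this measure, while the checkerboard yields a clearly positive value. Comparing such metrics across two cameras would also require matching resolution and camera pose, as noted above.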

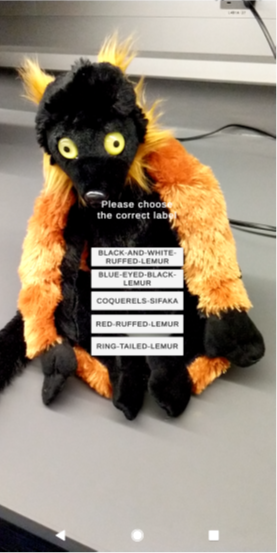

AR-based labeling for DNN fine tuning

Ashley (Hojung) Kwon

Ashley (Hojung) Kwon worked on AR-based labeling for DNN fine-tuning. She built a smartphone-based system in which a user generates labels for DNN-based lemur species detection through AR interfaces. She first collected a lemur image dataset, which includes images from YouTube videos showing lemurs in the wild or in enclosures, and photos from the Duke Lemur Center in which the view of the lemurs is obstructed by the metal bars, mesh, or protective glass of the enclosures. With the collected dataset, she fine-tuned a Faster R-CNN model to detect different species of lemurs. In the system Ashley built, the fine-tuned Faster R-CNN model suggests lemur labels for the captured frame, and the user can either confirm or correct each label through AR interfaces. The captured lemur images and the generated labels are then used to further fine-tune the Faster R-CNN model and increase its detection accuracy. This work demonstrates that AR interfaces can help address the time-consuming and laborious manual object labeling involved in training DNNs.
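The confirm-or-correct step can be sketched as a small data flow: the detector proposes a label, the user either accepts it or supplies a different one, and the result is stored for the next round of fine-tuning. The class names, field names, and species strings below are hypothetical and chosen only for illustration; they are not taken from Ashley's system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Suggestion:
    frame_id: str
    label: str    # species suggested by the detector
    score: float  # detector confidence in [0, 1]


@dataclass
class LabeledFrame:
    frame_id: str
    label: str
    corrected: bool  # True if the user overrode the suggestion


def review(suggestion: Suggestion, user_label: Optional[str]) -> LabeledFrame:
    """Confirm the suggestion (user_label is None) or replace it."""
    if user_label is None or user_label == suggestion.label:
        return LabeledFrame(suggestion.frame_id, suggestion.label, False)
    return LabeledFrame(suggestion.frame_id, user_label, True)


# Labels collected this way would form the next fine-tuning set.
training_set: List[LabeledFrame] = [
    review(Suggestion("frame-001", "ring-tailed lemur", 0.91), None),
    review(Suggestion("frame-002", "ring-tailed lemur", 0.48),
           "blue-eyed black lemur"),
]
```

Tracking the `corrected` flag is one possible design choice: the fraction of corrected frames gives a simple signal of how much the detector still depends on the user.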

Effects of Environmental Conditions on Persistent AR Content

Mary Jiang

Recent cloud-based services such as Microsoft’s Azure Spatial Anchors and Google’s Cloud Anchors facilitate the persistence of augmented reality (AR) content in a particular physical space, across both multiple users and time. These services work by first mapping the space using visual-inertial SLAM (simultaneous localization and mapping) to create a reference point cloud; a hash of the visual characteristics of each point is transmitted to the cloud to form the ‘anchor’. In a future AR session, a user can then transmit data about their point cloud to the service in order to compare it with the reference, resolve the anchor, and position AR content correctly. However, given that the point clouds are based on visual characteristics of the environment, properties such as light level and visible textures can affect both the accuracy and latency of resolving an anchor.

In this project we examined the effect of environmental conditions on the performance of Microsoft’s Azure Spatial Anchors service. We developed a method for measuring anchor resolve time in a custom AR app, and implemented our previously developed method of edge-based environment characterization. We conducted experiments in 15 diverse indoor and outdoor environments across the Duke University campus, as well as a separate set of experiments with different light levels. We also incorporated a qualitative assessment of anchor localization accuracy. Our results show that low light levels dramatically increase anchor resolve time, and that in normal light conditions, long resolve times may occur when AR device camera images have low edge strength or few detectable corners. This work provides valuable insights into the suitability of different spaces for persistent AR content, and further motivation for optimizing those environments. It will support future work on how data from multiple users in persistent AR environments, over longer time periods, can be combined and analyzed.

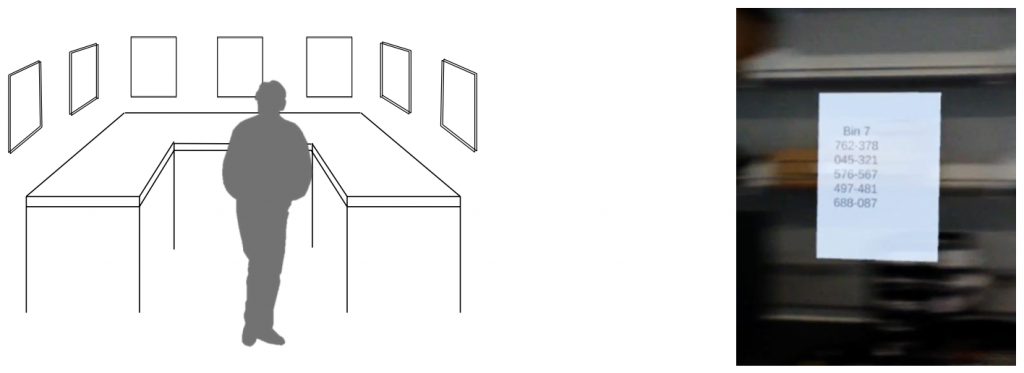

User Fatigue Detection in Augmented Reality using Eye Tracking

Alex Xu

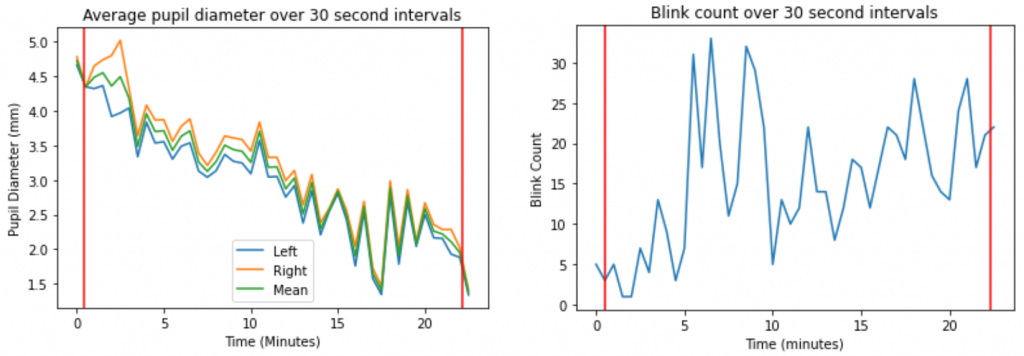

With commercial AR devices becoming more commonplace in industrial, medical, and educational settings, it is important to understand how cognitive attributes such as fatigue and mental workload may be detected in AR users, especially given that those factors can affect task performance. Previous work in the eye tracking community has established detection methods for 2D video stimuli with specialized eye tracking devices, but there has been little research conducted on AR settings and devices. Facilitated by the recent addition of pupil diameter to the Magic Leap One AR headset eye tracking API, here we build on our previous work on gaze-based activity recognition to develop a fatigue detection method on the Magic Leap One.

After developing a custom C# script to extract relevant eye tracking features from AR users (blink rate, blink duration, and pupil diameter), we conducted experiments to measure the accuracy and precision of eye movement data collection in different light conditions. We identified an appropriate use case, a warehouse stock checking task, and designed and developed a custom AR app to simulate it. We then designed and conducted an IRB-approved user study to measure eye movements, user-reported fatigue, and task performance. Our results show that increased fatigue is correlated with a decrease in pupil diameter and an increase in blink rate and duration, setting the stage for future work on developing a real-time fatigue detection system for AR. We will also build on this work to investigate the detection of other cognitive attributes in a similar manner, such as mental workload or engagement.
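To make the three features concrete, here is a minimal Python sketch of how blink rate, blink duration, and pupil diameter could be derived from a stream of eye tracking samples. It is not the lab's C# script and does not use the Magic Leap API; the sample format `(time_s, is_blinking, pupil_diameter_mm)` and the function name `blink_features` are assumptions made for illustration.

```python
def blink_features(samples):
    """Summarize blink and pupil features from eye tracking samples.

    `samples` is a time-sorted list of tuples
    (time_s, is_blinking, pupil_diameter_mm). This format is hypothetical.
    """
    blink_durations = []  # durations of completed blinks, in seconds
    blink_start = None
    for t, is_blinking, _ in samples:
        if is_blinking and blink_start is None:
            blink_start = t  # eye just closed
        elif not is_blinking and blink_start is not None:
            blink_durations.append(t - blink_start)  # eye reopened
            blink_start = None

    recording_s = samples[-1][0] - samples[0][0]
    # Pupil diameter is only meaningful while the eye is open.
    open_diameters = [d for _, is_blinking, d in samples if not is_blinking]

    return {
        "blink_rate_per_min":
            60 * len(blink_durations) / recording_s if recording_s > 0 else 0.0,
        "mean_blink_duration_s":
            sum(blink_durations) / len(blink_durations) if blink_durations else 0.0,
        "mean_pupil_diameter_mm":
            sum(open_diameters) / len(open_diameters) if open_diameters else 0.0,
    }
```

Computing these features over sliding windows, rather than over a whole session, would be one way to track how they change as a task progresses.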

Examining Edge-SLAM

Aining Liu

Aining worked on the implementation and measurement of an edge-assisted SLAM system. She installed an open-source edge-assisted SLAM system, Edge-SLAM, on two lab desktops (one serving as the edge and one as the client). Some computationally intensive tasks of the SLAM algorithm are wirelessly offloaded from the client to the edge, and three TCP links are established to carry frames, keyframes, and maps between the client and the edge. She measured the performance of Edge-SLAM to examine the impact of wireless network conditions on the data transmission latency of the three TCP connections and on the accuracy of Edge-SLAM. Her work draws attention to the bottlenecks and limitations of edge-assisted SLAM systems. Future work could build on it to optimize edge-assisted SLAM when the wireless network is constrained.
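One simple way to measure transmission latency over a TCP link is to time how long it takes to send a length-prefixed payload and receive a short acknowledgement. The sketch below does this over the loopback interface with Python's standard `socket` module. It is unrelated to Edge-SLAM's own code: the framing, the one-byte acknowledgement, and all function names are assumptions, and the measured value is a send-plus-acknowledgement time rather than a one-way latency.

```python
import socket
import struct
import threading
import time


def _recv_exact(conn, n):
    """Read exactly n bytes from a connected socket."""
    buf = bytearray()
    while len(buf) < n:
        chunk = conn.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed early")
        buf.extend(chunk)
    return bytes(buf)


def _edge_server(server_sock):
    """Stand-in for the edge: receive one payload, then acknowledge it."""
    conn, _ = server_sock.accept()
    with conn:
        (size,) = struct.unpack("!I", _recv_exact(conn, 4))
        _recv_exact(conn, size)
        conn.sendall(b"\x01")


def measure_transfer_latency(payload: bytes) -> float:
    """Return seconds from first byte sent until the acknowledgement arrives."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # let the OS pick a free port
    server.listen(1)
    worker = threading.Thread(target=_edge_server, args=(server,), daemon=True)
    worker.start()

    with socket.create_connection(server.getsockname()) as client:
        start = time.perf_counter()
        client.sendall(struct.pack("!I", len(payload)) + payload)
        _recv_exact(client, 1)
        elapsed = time.perf_counter() - start

    worker.join()
    server.close()
    return elapsed
```

Calling `measure_transfer_latency(b"x" * 100_000)` times a payload roughly the size of a compressed camera frame. Over a real wireless link, repeating the measurement for frame-sized, keyframe-sized, and map-sized payloads would show how each connection responds to changing network conditions.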

Semantic-visual-inertial SLAM

Rohit Raguram

Rohit worked on incorporating semantic information into an open-source VI-SLAM system (ORB-SLAM3) to build a semantic-visual-inertial SLAM (SVI-SLAM) system. He first performed image segmentation with the PointRend model and the PixelLib library, detecting dynamic object classes (such as people and cars) in the ORB-SLAM3 visual inputs. After the image segmentation, he blurred the pixels associated with dynamic objects, along with their adjacent pixels. In this way, ORB-SLAM3 extracts fewer feature points from the dynamic objects, which reduces their negative impact on the VI-SLAM system. We are expanding on this work in continuing research into synthesizing semantic and spatial information to improve the performance of VI-SLAM.
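The blurring step can be sketched in a few lines, assuming a segmentation mask is already available. The example below grows the mask to include adjacent pixels and then applies a box blur inside the grown region only. It does not call PointRend, PixelLib, or ORB-SLAM3; the function names, the box blur, and the radius parameters are assumptions chosen to keep the example self-contained, not Rohit's actual implementation.

```python
def dilate(mask, radius=1):
    """Grow a boolean mask so it also covers pixels within `radius`."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[ny][nx] = True
    return out


def blur_masked(gray, mask, grow=1, kernel=1):
    """Box-blur only the pixels under the dilated mask.

    `gray` is a 2D list of intensities; `mask` marks dynamic-object pixels.
    Pixels outside the dilated mask are left untouched.
    """
    region = dilate(mask, grow)
    h, w = len(gray), len(gray[0])
    out = [row[:] for row in gray]
    for y in range(h):
        for x in range(w):
            if region[y][x]:
                window = [gray[ny][nx]
                          for ny in range(max(0, y - kernel), min(h, y + kernel + 1))
                          for nx in range(max(0, x - kernel), min(w, x + kernel + 1))]
                out[y][x] = sum(window) / len(window)
    return out
```

Blurring flattens the local intensity differences that corner-based feature detectors rely on, so fewer features are likely to be extracted inside the blurred region while the static background is left unchanged.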

VR viewport movement in room-scale VR

Sasamon Omoma

Sasamon worked on characterizing VR viewport movement in room-scale VR. Expanding on the lab’s previous work on headset VR in which users navigate with controllers, she analyzed statistics of the viewport orientation in a new context: headset VR with real-walking navigation in room-scale VR. The resulting models are built from a public VR navigation dataset. She also built a room-scale VR system for a future large-scale user study of VR with real-walking navigation. In the system, she implemented a snap-turn-like redirected walking technique to create a simple virtual-to-physical-world mapping. Both the analysis of the public dataset and the initial small-scale data collection in the implemented system confirm that the flight time distribution for user navigation is well fit by the pose model proposed in the previous work.

Digital markers for pervasive AR

Vineet Alaparthi

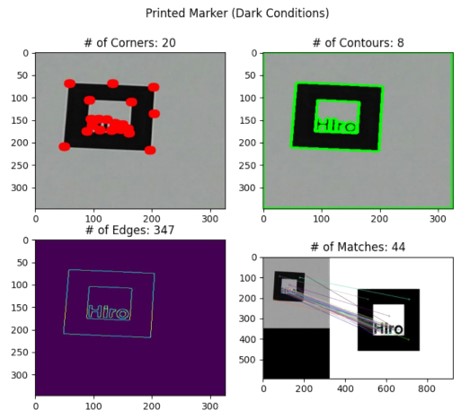

Hologram overlaid on the E-Ink marker (left), and testing of various CV algorithms used in marker detection, including corner, contour, and edge detection and template matching (right).

Vineet developed an algorithm that adapts a dynamic marker to improve the AR marker detection rate in different environmental conditions. He first explored the use of various types and sizes of fiducial markers in AR applications and set up a communication pipeline from the E-Ink display to the HoloLens 2. He then explored marker detection rates in different environmental conditions using various CV algorithms. As a final demo, he created a proof-of-concept algorithm that adapts a digital marker in poor environmental conditions to achieve a better detection rate in AR. Vineet will continue working on the project to improve the algorithms he has developed, incorporate the adaptation algorithm into the HoloLens 2, and evaluate the performance of his algorithms in different environmental conditions in real time.

Vineet’s presentation of his work at the Fall 2021 ECE poster session